A value de-duplicated cache -2

Optimizing memory through clever data structures

Previously, we figured that duplicate data in our LRU cache was eating up more than 50% of our whole heap space. Lets see where they are coming from and what can we do about it.

A simplistic view of service cache

Caching of frequently used data to increase application throughput and reduce latency is a very common pattern.

Consider a case of services talking to each other. Service A fetches certain data from service B. This obviously incurs network latency, serialization-deserialization latency and CPU work, cost due to payload transmitted over the network and so on. If we know that the data from service B is not super real-time and we can assume some level of stability or immutability of the data, say, 1 hour, then we can essentially cache service B’s response for an hour and reduce our cost, latency and improve throughput.

Of course, there are many things to consider in a cache: invalidation strategies, what to cache, how much to cache, how long to cache, architectural requirements and so on. Many of these parameters are dependent on the business logic (for example we can’t and shouldn’t cache account balance maybe?) and some are service infrastructure dependent (how much memory can we assign for the cache?). Then there is completely separate discussion of distributed cache or local cache (an architectural question).

For now, we are simply talking about in-memory cache here. A HashMap!

Implementation of an InMemory Cache

There are off-the-shelf libraries which provide ready to use in memory caches. I am talking about JVM caches; but its the same in any other language or runtime. Some of the widely used caches include Guava or Spring.

Then there is always the option of doing it in-house for better control and with the philosophy of implementing only the required features instead of bloating the service up with some external library like Guava.

A sample AOP styled cache looks like:

(Taken shamelessly from spring docs.)

@Cacheable("books")

public Book findBook(ISBN isbn) {...}

None of the default implementations will provide any de-duped strategies. And that makes sense. Deduplication comes with its own set of problems.

What is de-duped cache and why we might need it?

A cache is just a key-value store, and naturally a cache can have only unique keys. But consider a case where values are duplicated across many many keys.

A hypothetical example (probably not a great one): say we have a bunch of vendors, and the currencies they can transact in.

Now, of course, if the query pattern is something like, get all the vendors for a given currency, we would need the currency as the key.

But if we have a query pattern that says something like: get all the transact-able currencies for a given vendor, the vendorId becomes the key.

And imagine a globalized economy (where we already are, kind of), most of the vendors can transact in most currencies. So, the value part gets duplicated over here:

KEY | VALUE

------------------------------------------------

vendor1 | CAD,USD,EUR,GBP....

vendor2 | CAD,USD,EUR,GBP....

The problem is quite apparent here: duplicate data being repeated over and over again. The situation becomes worse with large number of keys with the same duplicated data.

If you are aware of JVM string DeDuplication, this is exactly the same case but above the JVM layer and inside the application.

Implementation of a Value Deduped Cache

A value DeDuped LRU cache is essentially a Cache over another cache. Simply put, we make the below change:

BEFORE:

key -> valueAFTER:

key -> hash(value)

hash(value) -> valueThis clearly reduces the number of bulky value entries to their hashes, which do not eat up as much space.

A pseudocode for such an implementation might look like:

(The below code is also available here at github gist.)

class LeastRecentlyUsedDeDupedExpirationCache<K, V> {

// To keep null values and distinguish null against cache miss

private static final Object NULL = new Object();

/**

* Ordered map so that we can implement LRU.

* This is the original LRU built on top of something like a LinkedHashMap

* This keeps the "key -> hash(value)" entries

*/

private final LeastRecentlyUsedMap<K, ExpirationValue<Integer>> map;

/**

* Map to hold dedup values. This need not be ordered as this is a map to backup the ordered

* keys stored above.

*/

private final Map<Integer, V> valueMap;

/**

* Other attributes such as capacity, ttl etc., aren't shown for brevity.

**/

public Value<V> get(final K key) {

ExpirationValue<Integer> hash;

Value<V> res = null;

synchronized (this.map) {

hash = this.map.get(key);

}

if (hash != null) {

V value = valueMap.get(hash.getValue());

if (value == null) {

/**

* This can happen when we have references like:

* key1 -> hash -> value

* key2 -> hash -> value

*

* Now, if key1 is expired, it will remove the the entries from both the maps.

* That results in having : key2 -> hash -> null

*

* To avoid this, we can do the following options

*

* 1. Keep a ref-count of the hash : key1 -> hash -> value, ref-count

* We would need to update the ref-count whenever the key is added/expired

*

* 2. Keep another map which is revert of 1st map: hash -> key

* That doesn't look so efficient

*

* 3. Just return null and make it look like the value didn't exist, and so the MethodProxyHandler will

* fill up the cache

*/

return null;

} else if (value.equals(NULL)) {

res = new DeDupedValue<>(null);

} else {

res = new DeDupedValue<>(value);

}

}

return res;

}

public void put(final K key, final V value) {

synchronized (this.map) {

// Add the value to the map.

int hash = Objects.hashCode(value);

this.map.put(key, new ExpirationValue(hash, currentTimeInMillis + this.timeToLiveInMillis));

if (value == null) {

this.valueMap.put(hash, (V) NULL);

} else {

this.valueMap.put(hash, value);

}

}

}

}

Invalidation

Since the same values are pointed to by different keys it is possible to end up in a situation where a certain key is expired, and hence the value is removed, and we end up in cache miss of other keys. For example:

k1 (expires at 10:00) -> hash(v1) -> V1

k2 (expires at 10:05) -> hash(v1) -> V1If the expiration of k1 removes v1 from the value map, fetching k2 (or any other key with the same value) will result in a cache miss.

This can be avoided if we keep a reference count for each of the values. That makes the implementation slightly complicated. In the above code, we simply do a cache miss and rely on the fact that it will get pre-filled.

Immutability Caution

While it all sounds great, one thing to consider here is immutability. If the values cached are being mutated outside of the cache, it will effect all the keys that are holding references to the value. And that will create hard to debug issues and possibly corrupt data on production.

To avoid that, we can do one or more of the following:

Ensure data immutability - do not modify the cached items

Clone the object and then return - will consume more space - also, why de-dup?

Ensure that the hash function computes a different hash for any modification to the object, and compare the hash to stored hash value before returning.

If the requirements are something of the sort of ‘every key can alter the value as it requires’, we can go ahead with the clone approach, although, it probably doesn’t make much sense on wht to do the deduplication in the first place?

To ensure the immutability of the cached values, with the 3rd approach, the get method would look like:

public Value<V> get(final K key) {

ExpirationValue<Integer> hash;

Value<V> res = null;

synchronized (this.map) {

hash = this.map.get(key);

}

if (hash != null) {

V value = valueMap.get(hash.getValue());

...

if (hash != hashFunction(value)) {

// IMMUTABILITY VIOLATED

// either force a cache-miss or throw exception

throw new ConcurrentModificationException();

}

res = new DeDupedValue<>(value);

...

}

return res;

}

Here, even if we find the value in the cache, we double check the authenticity of the value by recomputing the cache and comparing against what’s there in the keys. If there’s a mismatch, it means it got modified through some other key.

So, either we can force a cache-miss here, or we can throw an exception and let the user know what they are doing.

Improvements after deduped cache

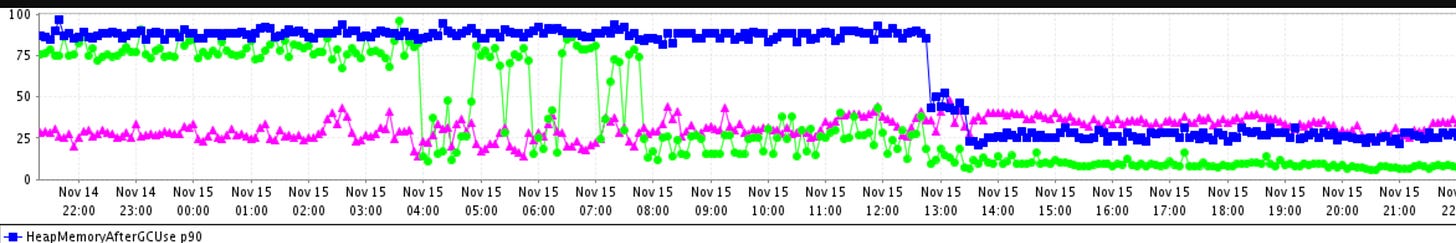

Post dedup cache, we see significant improvements in the heap space usage. Also as a side effect, no more OOM errors, and we could get the instance types down to one level cheaper, saving quite a lot of $$s.

Conclusion

JVM eco-system has some wonderful tools to help with deep dives into the internals of runtime behavior. Eclipse MAT is one such tool. Before jumping into ‘increase the heap space’ or ‘get a larger machine’, it is worthwhile to investigate which components are actually causing the issue.

As seen, some clever data structure optimizations can actually save lots in terms of performance as well as money spent on the infrastructure.